A brief history

Historically, most of our website platform projects involved building simple, public-facing websites. Most sites were just a couple of page types, with different blocks. Some of them had a few bespoke features such as a forum, survey form, or some sort of payment gateway. Not overly complicated, just enough to provide a decent user experience.

The majority of these sites weren’t very resource-intensive, so we would rent a shared server and host all of the websites on it. It was then a case of placing a virtual host file on that server to direct traffic for a domain or a particular directory. The sites were light enough that sharing the server’s resources was completely fine.

It definitely wasn’t ideal but it worked reasonably well and was cost-effective. Perfect for the small business websites we were building.

Mo’ projects, mo’ problems…

Fast forward a few years, and the type of sites we were building began to change. We started getting bigger projects for large and enterprise clients, ranging from government departments and councils to non-profits, internal enterprise intranets, and SaaS products.

These projects had much higher standards and more complex business logic than a brochure website for the local retail store. For some of these builds, particularly the ones that required a tender submission, we also had to guarantee a certain amount of uptime each year and ensure that the hosting solution was secure.

We started to encounter more problems with a shared hosting setup. Resource-intensive sites would often hog all of the memory and cause issues for the other sites (we cannot confirm or deny that one or two sites might have crashed). Cost versus scalability also became a cause for concern as there is only so much CPU and RAM that can be added before it becomes too expensive to maintain.

But why not just rent or hire professional managed hosting services? Oh, but we did…

We encountered several issues going down this route, especially when it came to relying on these third parties to install software packages (some were just unreliable and others did not even offer this as part of their service). The support response time was also quite lackluster if it was not an emergency. If the site had not crashed, the turnaround time on support requests could take up to two weeks.

Cloud experimentation

Amongst all of that, there was also some experimentation with self-hosting on other cloud providers, specifically AWS EC2 and AWS Elastic Beanstalk. This experiment turned out to be a bit of a failure, as developers were spending more time on infrastructure maintenance than on adding business value for the client.

The hunt for a solution

After encountering all of these issues, we decided to put together a checklist of what we needed from a hosting solution. We came up with the following:

- A simple and scalable infrastructure

- A robust deployment and rollback process, so that our developers had a good experience

- Arguably the most important feature: the ability to self-serve rather than rely on a third party

- The ability to back up, monitor, and log everything

Taking everything into consideration we knew we needed a different approach, a cloud-native approach.

What is a Cloud-Native approach?

To put it simply, a cloud-native approach is a way of developing software that utilises cloud computing to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds.

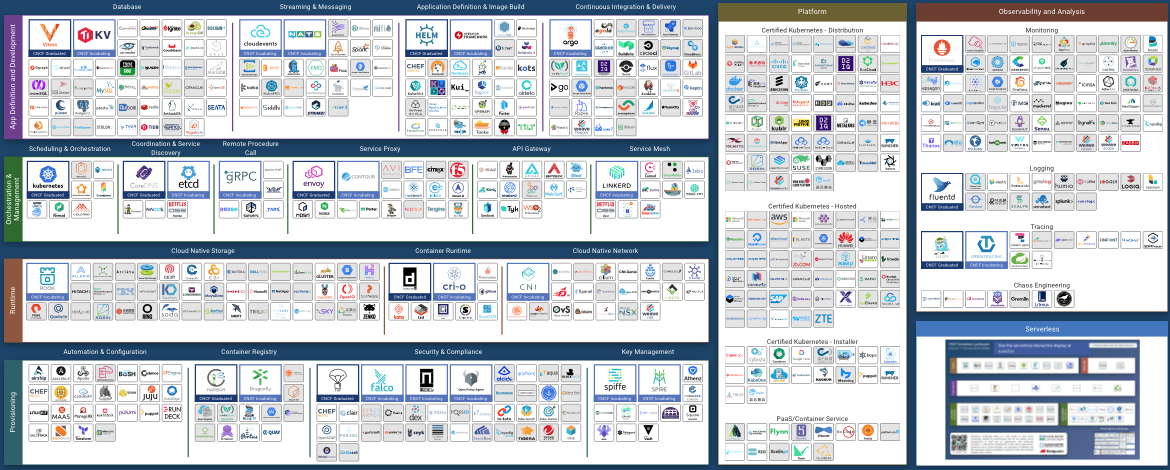

To put it not so simply, this blog banner maps out a cloud-native landscape. It is certainly a complex task to map out.

Five Principles of a Cloud-Native approach

We chose the cloud-native approach because its core principles were key to solving many of the issues we had started to encounter. Its five basic principles are:

- Automation: Automate everything that you can

- Stateless components: Make components of your infrastructure stateless and immutable, so that you can destroy and rebuild them if needed

- Managed services: Use managed services where they exist to save time and operational overhead

- Separate but together: Design components to be standalone and secure, but able to work together where needed

- The only constant is change: Cloud-native architecture always needs to evolve, so you should simplify and improve what you currently have wherever and whenever you can

These principles seemed to tick off a few things we were looking for when deciding how to build our future platforms.
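To make the stateless principle a little more concrete, here is a minimal Python sketch. It is an illustration only, not how our platform is built: `ExternalStore` and `StatelessCounter` are hypothetical names, and the in-memory dictionary stands in for whatever managed database or cache would hold the state in practice.

```python
from dataclasses import dataclass, field


@dataclass
class ExternalStore:
    """Hypothetical stand-in for a managed store (database, cache, bucket)."""

    data: dict = field(default_factory=dict)

    def get(self, key, default=0):
        return self.data.get(key, default)

    def put(self, key, value):
        self.data[key] = value


class StatelessCounter:
    """A component that keeps no state of its own between requests."""

    def __init__(self, store: ExternalStore):
        # The component only holds a reference to the external state.
        self.store = store

    def handle_visit(self, page: str) -> int:
        # Read, update, and write back; nothing is cached on the instance.
        count = self.store.get(page) + 1
        self.store.put(page, count)
        return count


store = ExternalStore()
first_instance = StatelessCounter(store)
first_instance.handle_visit("/home")
first_instance.handle_visit("/home")

# Destroy and rebuild the component. Nothing is lost, because the state
# lives in the store rather than in the instance.
del first_instance
rebuilt_instance = StatelessCounter(store)
total = rebuilt_instance.handle_visit("/home")
print(total)  # 3
```

Because the counter itself remembers nothing, any number of identical instances could be started or thrown away without affecting the data. The sketch ignores concurrent writes, which a real managed store would need to handle.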

Cloud-native buzzwords

There are a couple of buzzwords that pop up regularly when people talk about a cloud-native approach or landscape. The two big ones are serverless and fully managed services.

Fun fact – serverless doesn’t actually mean there are no servers. It refers to eliminating a layer of infrastructure management where the provider is responsible for all the server infrastructure while developers only have to worry about the code that runs on those services. Serverless services typically can autoscale as needed and you just pay for what you use.

As for fully managed services, the term usually refers to not having to worry about server management. The cloud provider is responsible for:

- Configuring hardware

- Scaling when additional resources are needed

- Maintaining and applying security patches where needed so that everything is kept up to date

This enables developers to focus on business value and write code the way they want, without having to worry about configuring and maintaining the underlying infrastructure that runs it.

So now that we had chosen an approach and had some experience with cloud platforms, all we had to do was pick one.

Google Cloud Platform (surprise, surprise)

Yes, we’re a Google Partner that chose the Google Cloud Platform – surprise, surprise. The dev team did genuinely enjoy the experience as compared to their previous encounters with Azure and AWS.

What is Google Cloud Platform?

To be nerdy about it, Google Cloud Platform (GCP) is a suite of cloud computing services that runs on the same infrastructure that Google uses internally for its end-user products, such as Google Search, Gmail, Google Drive, and YouTube.

Alongside a set of management tools, it provides a series of modular cloud services including computing, data storage, data analytics, and machine learning. GCP provides infrastructure as a service, platform as a service, and serverless (there’s that word again) computing environments.

Google Cloud Platform is a part of Google Cloud, which includes the Google Cloud Platform public cloud infrastructure, as well as Google Workspace (formerly G Suite), enterprise versions of Android and Chrome OS, and application programming interfaces (APIs) for machine learning and enterprise mapping services.

But really, why GCP?

We chose GCP because of where we think it really shines: it covers the whole modern digital experience, which is more than just website hosting.

For example, after a website goes live and an organisation matures, martech and adtech start to play a much more important role in the organisation’s digital maturity strategy.

GCP does really well in the adtech and martech space, with analytics, big data, and machine learning tools such as Google Analytics, BigQuery, and Looker for crunching and analysing terabytes of data. It just made logical sense to offer the GCP solution to clients.

Future builds

A cloud build isn’t necessary for every website. However, depending on your CMS, martech, and adtech stacks, building in or moving to the cloud can provide a myriad of benefits.

It can not only make developers’ lives easier but also enable better integration with your other GMP products, which in turn will give you a more sophisticated understanding of your customers. Ultimately, this will improve your customers’ overall experience.